I learnt this interesting technique while teaching a session for a management consultancy.

The question was how to investigate the viability of a market, and survey data is one way to find out! But for our survey results to be useful, the respondents should match the general “profile” of the country. This is usually checked through demographic profiling, e.g. by age and gender. Ideally your sample is already representative, i.e. if 20% of the population are women aged 18-25, then 20% of your survey respondents should be too.

But this isn’t always so easy to achieve!

The next best thing is to weight your responses. This only works if you already have some population stats on your audience.

Say, for instance, we want to understand the market for a new poutine product. Given it's a regional speciality, the majority of our customers will probably be Canadian.

True population

| Country | % of population |
| --- | --- |
| Canada | 0.7 |
| Italy | 0.1 |
| Ireland | 0.1 |
| UK | 0.1 |

Here’s a profile of the folks who have responded to our survey:

Our Sample

| Country | Name | % of sample |
| --- | --- | --- |
| Canada | Emily | 0.2 |
| Canada | Nai | 0.2 |
| Italy | Bene | 0.2 |
| Ireland | Kevin | 0.2 |
| UK | Ben | 0.2 |

If we profile our data, we see the profiles don’t match at all! But what can we do? Getting survey samples to exactly match our population is time-consuming and expensive.

| Country | % of sample | % of population | Notes |
| --- | --- | --- | --- |
| Canada | 0.4 | 0.7 | In our sample, 40% of respondents are Canadian, but in our true market population it's 70%! |
| Italy | 0.2 | 0.1 | Italian, Irish and British respondents are over-represented compared with the actual market |
| Ireland | 0.2 | 0.1 | |
| UK | 0.2 | 0.1 | |
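To make the profiling step concrete, here is a minimal Python sketch that derives the sample shares from the respondent list and compares them with the known population shares. The variable names are my own, and the data is just the five respondents from the tables above.

```python
from collections import Counter

# Respondents from the "Our Sample" table, as (country, name) pairs
respondents = [
    ("Canada", "Emily"),
    ("Canada", "Nai"),
    ("Italy", "Bene"),
    ("Ireland", "Kevin"),
    ("UK", "Ben"),
]

# Known population proportions from the "True population" table
population_share = {"Canada": 0.7, "Italy": 0.1, "Ireland": 0.1, "UK": 0.1}

# Profile the sample: the share of respondents from each country
counts = Counter(country for country, _ in respondents)
n = len(respondents)
sample_share = {country: counts[country] / n for country in population_share}

for country in population_share:
    print(
        f"{country}: sample {sample_share[country]:.1f} "
        f"vs population {population_share[country]:.1f}"
    )
```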

But even if our sample is not representative of our population, we can add a new column that re-weights each response, which lets us extrapolate the correct trends to the country level.

- Prepare lookup tables with the population proportions calculated.

- Create a weight index.

  - The calculation is [% of population] / [% of sample], e.g. 0.7 / 0.4 = 1.75 for Canada and 0.1 / 0.2 = 0.5 for Italy. This scales up demographics that are under-represented in our sample and scales down those that are over-represented.
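The two steps above can be sketched in Python as follows. This is an illustrative version, not the spreadsheet from the original session: it assumes the lookup tables are plain dictionaries and that each respondent simply inherits the weight index of their country (the `respondent_weight` name is hypothetical).

```python
# Lookup tables: population and sample proportions per country
population_share = {"Canada": 0.7, "Italy": 0.1, "Ireland": 0.1, "UK": 0.1}
sample_share = {"Canada": 0.4, "Italy": 0.2, "Ireland": 0.2, "UK": 0.2}

# Weight index = [% of population] / [% of sample]
# Above 1 scales a group up (under-represented); below 1 scales it down
weight_index = {
    country: population_share[country] / sample_share[country]
    for country in population_share
}

# Respondent-level data: each person looks up the weight index of their
# country, similar to a VLOOKUP or a join against the lookup table
respondents = [
    ("Canada", "Emily"),
    ("Canada", "Nai"),
    ("Italy", "Bene"),
    ("Ireland", "Kevin"),
    ("UK", "Ben"),
]
respondent_weight = {name: weight_index[country] for country, name in respondents}

for name, weight in respondent_weight.items():
    print(f"{name}: {weight:.2f}")
```

Emily and Nai each get 1.75, while Bene, Kevin and Ben each get 0.50.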

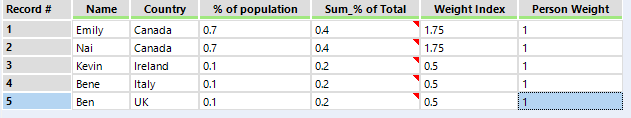

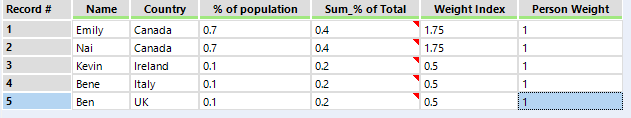

- The data comes out as follows:

The Weight Index and Person Weight columns are the key parts of this technique. Both columns sum to 5 (as we have 5 respondents), but the weight index redistributes that total so our results reflect the demographics we matched against: the two Canadian respondents now carry 3.5 of the 5, i.e. 70%.
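As a sanity check, the sketch below (assuming, as before, dictionaries as lookup tables and each respondent carrying their country's weight index) confirms that the weights sum to the number of respondents and that the weighted country shares match the population. The `would_buy` answers are made up purely for illustration, to show how a weighted result can differ from the raw one.

```python
from collections import Counter, defaultdict

# Lookup table of known population proportions
population_share = {"Canada": 0.7, "Italy": 0.1, "Ireland": 0.1, "UK": 0.1}

# Respondents as (country, name) pairs
respondents = [
    ("Canada", "Emily"),
    ("Canada", "Nai"),
    ("Italy", "Bene"),
    ("Ireland", "Kevin"),
    ("UK", "Ben"),
]
n = len(respondents)

# Sample proportions and weight index per country
# (every country must appear in the sample, or the division fails)
counts = Counter(country for country, _ in respondents)
weight_index = {c: population_share[c] / (counts[c] / n) for c in population_share}

# Assumption: each respondent carries their country's weight index
weights = [weight_index[country] for country, _ in respondents]
total_weight = sum(weights)  # approximately 5.0, the number of respondents

# Weighted share of each country: should reproduce the population profile
weighted_share = defaultdict(float)
for (country, _), w in zip(respondents, weights):
    weighted_share[country] += w / total_weight

# HYPOTHETICAL answers (1 = would buy, 0 = would not), for illustration only
would_buy = {"Emily": 1, "Nai": 1, "Bene": 0, "Kevin": 0, "Ben": 1}
unweighted_rate = sum(would_buy[name] for _, name in respondents) / n
weighted_rate = (
    sum(w * would_buy[name] for (_, name), w in zip(respondents, weights))
    / total_weight
)

print(f"Total weight: {total_weight:.2f}")
for country, share in weighted_share.items():
    print(f"{country}: weighted share {share:.2f}")
print(f"Would buy (unweighted): {unweighted_rate:.2f}")
print(f"Would buy (weighted): {weighted_rate:.2f}")
```

With these made-up answers, the weighted rate (0.80) is higher than the raw rate (0.60) because the two Canadian respondents, who both said yes, now account for 70% of the total weight.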

I’ve attached a sample here if you’re interested in taking a look.

I hope you’ll get to use this technique with your next survey data use case!